A practical look at the tools reshaping how we create images, video, motion, and interactive media

Over the last few years, something subtle has happened inside creative teams.

The conversation has shifted from:

“What tools should we use?” to “How do we design systems that create content faster, smarter, and more consistently?”

AI didn’t suddenly replace multimedia workflows.

It integrated slowly, first as assistants, then as accelerators, and now as core infrastructure.

Today, it’s normal for a single project to include:

For teams building modern digital experiences, AI isn’t just a shortcut anymore. It’s becoming part of how brands are designed, launched, and scaled.

As a company that naturally approaches projects through storytelling, whether that’s brand narratives, user experiences, or product ecosystems, what stands out to us in 2026 isn’t just how good AI content looks. It’s how much faster ideas can move from concept -> system -> execution.

One of the clearest examples of this shift happened to me while working on a music video.

We had a transition planned: a slow zoom pan shot of someone smoking, cutting into a micro shot of the cigarette, then transitioning into the next scene.

The problem was simple: we didn’t have the time, we didn’t have the micro equipment, and we were already behind schedule.

Normally, that shot would have been cut or replaced with something less ambitious. Then someone on our team said, “Don’t worry, I’ve got it.” Most of us were skeptical. The next day, he came back with the finished shot, generated and blended using Higgsfield AI. What surprised us wasn’t that the shot existed.

It was how well it worked inside the edit. The transition moved from our real footage into the AI micro shot and back into real footage in the next scene, and it felt seamless. No visual break. No obvious “AI moment.” Just a shot that solved a production problem. That said, Higgsfield is not ideal for every project. Results can vary depending on complexity, length, and workflow needs. In this case, it worked perfectly as a targeted solution for a specific shot, but teams should evaluate AI tools carefully to see which ones fit reliably into their production pipelines.

Moments like this are when AI stops being a trend and starts becoming infrastructure.

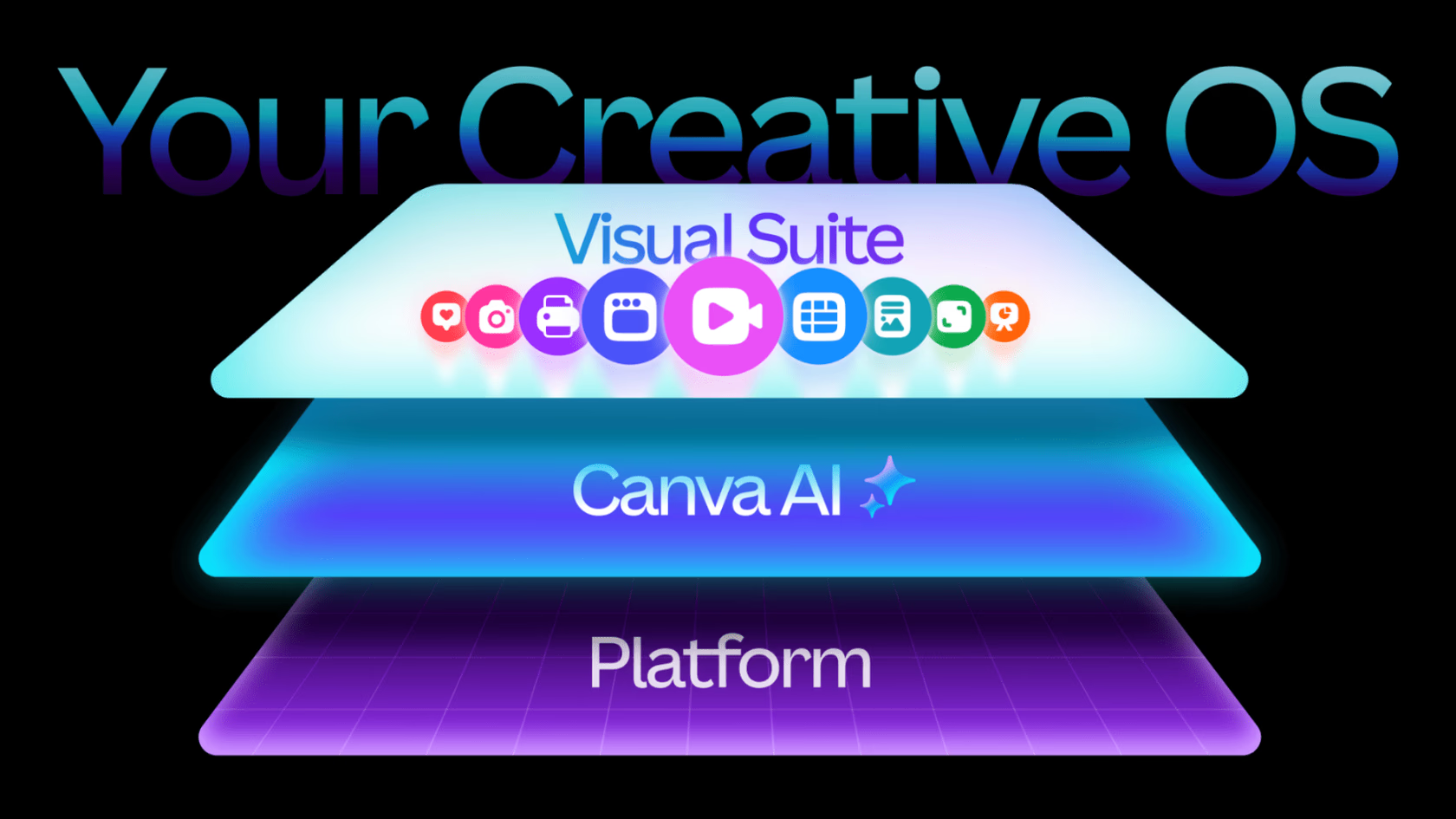

The biggest change happening right now isn’t about any single AI tool. It’s about how tools are starting to connect.

New platforms are emerging to unify multiple AI models into collaborative creative environments, aiming to handle everything from ideation to distribution inside one workflow.

Production environments are also shifting toward unified “infinite canvas” workflows, connecting video, image, voice, and editing pipelines into one place. Creative production is becoming less about individual outputs and more about connected creative systems.

This is especially relevant for agencies and product teams building full digital ecosystems, where brand, content, and software need to evolve together.

Adobe continues to dominate commercial creative workflows by embedding generative AI across Creative Cloud, including image generation, generative fill, and early image-to-video workflows.

The advantage here isn’t just generation quality. It’s continuity across the full design pipeline.

Modern image AI tools now support:

For brand-focused teams, consistency matters more than raw output quality.

Tools like Runway now generate high-quality video with strong motion and prompt accuracy, making them usable for real marketing production.

Concept videos are increasingly close to final deliverables. Runway has also partnered with Lionsgate, yes, the film production and distribution company behind franchises such as The Hunger Games, John Wick, and Twilight.

This shows the direction AI is moving in the film industry.

Veo-class models are pushing toward full scene generation, video plus synchronized audio. This changes how pre-production works. Storyboards, rough cuts, and concept visuals can come from the same pipeline.

Cinematic AI video tools like those from Luma AI now support HDR and professional color workflows, allowing AI video to integrate directly into film and commercial post pipelines.

Script -> storyboard -> shot composition -> video generation. For teams that lead with story rather than assets, this is a major shift.
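To make the chain above concrete, here is a minimal sketch of how a script-first pipeline could be wired together. Everything in it is hypothetical and for illustration only: the `Project` type, the stage names, and the clip filenames are assumptions, and the `generate_video` step is a placeholder where a real team would call whichever video model they use.

```python
from dataclasses import dataclass, field


@dataclass
class Project:
    """Hypothetical container that each pipeline stage enriches."""
    script: str
    storyboard: list[str] = field(default_factory=list)
    shots: list[dict] = field(default_factory=list)
    clips: list[str] = field(default_factory=list)


def make_storyboard(project: Project) -> Project:
    # Assumption: one storyboard beat per non-empty line of the script.
    project.storyboard = [
        line.strip() for line in project.script.splitlines() if line.strip()
    ]
    return project


def compose_shots(project: Project) -> Project:
    # Assumption: open on a wide shot, then move in close for later beats.
    project.shots = [
        {"id": i, "beat": beat, "framing": "wide" if i == 1 else "close"}
        for i, beat in enumerate(project.storyboard, start=1)
    ]
    return project


def generate_video(project: Project) -> Project:
    # Placeholder: a real stage would send each shot to a video model.
    project.clips = [f"clip_{shot['id']:02d}.mp4" for shot in project.shots]
    return project


# The pipeline is just an ordered list of stages, so steps can be
# swapped or extended without touching the others.
PIPELINE = [make_storyboard, compose_shots, generate_video]


def run(script: str) -> Project:
    project = Project(script=script)
    for stage in PIPELINE:
        project = stage(project)
    return project


result = run(
    "Slow zoom on the singer.\n\nMicro shot of the cigarette.\nCut to the next scene."
)
print(result.clips)
```

The point of the sketch is the shape, not the stages themselves: the story is the input, and every later artifact is derived from it in order.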

The next wave isn’t individual tools.

It’s platforms that coordinate multiple AI systems inside one production environment.

These aim to unify:

Production is shifting from execution to orchestration.

As AI lowers the barrier to creating visuals and video, the differentiator shifts.

Not to who can generate content fastest, but to who can make content feel intentional, connected, and meaningful.

The competitive advantage is moving toward:

AI can generate assets. But it still needs someone to define:

1. Real-Time Interactive Media

Content that adapts based on user behavior.

2. Fully Connected Creative Pipelines

Ideation -> Production -> Distribution -> Analytics -> Optimization

All connected through AI feedback loops.
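As a rough illustration of what a feedback loop between analytics and optimization could look like, the sketch below ranks content variants by click rate and keeps the strongest ones for the next production round. The `Variant` type, the click-rate metric, and the numbers are all assumptions made up for the example, not data from a real campaign, and no AI model is involved in this simplified version.

```python
from dataclasses import dataclass


@dataclass
class Variant:
    """Hypothetical content variant with simple distribution analytics."""
    name: str
    impressions: int = 0
    clicks: int = 0

    @property
    def click_rate(self) -> float:
        # Guard against division by zero for variants not yet distributed.
        return self.clicks / self.impressions if self.impressions else 0.0


def optimize(variants: list[Variant], keep: int = 1) -> list[Variant]:
    """Return the `keep` best-performing variants, best first."""
    ranked = sorted(variants, key=lambda v: v.click_rate, reverse=True)
    return ranked[:keep]


# Illustrative numbers only.
variants = [
    Variant("hero_a", impressions=1000, clicks=30),
    Variant("hero_b", impressions=1000, clicks=55),
    Variant("hero_c"),  # produced but not yet distributed
]

winners = optimize(variants, keep=2)
print([v.name for v in winners])
```

In a connected pipeline, the winners would feed back into ideation as the starting point for the next set of variants.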

3. Creative Teams Become System Designers

The role shifts from making assets to designing how assets are generated and deployed at scale.

AI isn’t eliminating creative teams.

It’s eliminating:

The companies pulling ahead are treating creative, technology, and automation as one ecosystem.

The future of multimedia won’t be defined by who has access to the best tools. It will be defined by who understands how to connect: Story -> System -> Scale.

This is where companies like WD Strategies are focusing, not just on producing content, but on designing how brand, software, automation, and storytelling work together as one digital growth system.

Because sustainable growth doesn’t come from more content. It comes from better experiences, better systems, and clearer narratives. The teams that can engineer all three together won’t just adapt to AI. They’ll help define how it’s used.